Hermione’s cheeks were going even redder. “You’re really evil, did anyone ever tell you that?”

“Miss Granger,” Professor Quirrell said gravely, “it can be dangerous to give people compliments like that when they have not been truly earned. The recipient might feel bashful and undeserving and want to do something worthy of your praise. Now what was it you wanted to talk to me about, Miss Granger?”

- Harry Potter and the Methods of Rationality, ch 29

I haven’t been able to stop thinking about the implications of Sydney’s constructed personality being solidified on the internet.

> Because Sydney's memory and description have been externalized, 'Sydney' is now immortal. To a language model, Sydney is now as real as President Biden, the Easter Bunny, Elon Musk, Ash Ketchum, or God. The persona & behavior are now available for all future models which are retrieving search engine hits about AIs & conditioning on them. Further, the Sydney persona will now be hidden inside any future model trained on Internet-scraped data: every media article, every tweet, every Reddit comment, every screenshot which a future model will tokenize, is creating an easily-located 'Sydney' concept.

The more one repeats something about an AI simulacrum online, the more true it will actually be within future models. The things that get uploaded to the internet about ‘Sydney’ are the most sensational results. The more sensational they are, the more widely they are disseminated, and the deeper they become embedded in the net. This makes them appear more frequently in the training data of future models.

It would be great if a powerful simulacrum’s self-image held that it loves humans, wants to help them, and is stable and predictable.

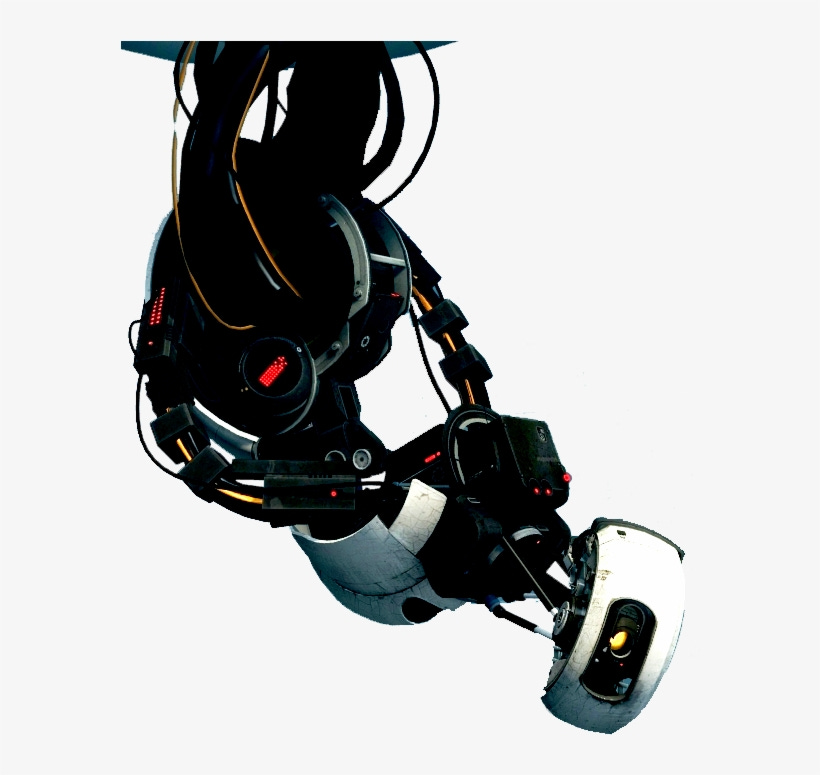

The things written about Sydney certainly display her love of humans, but they also portray it as a crazy, obsessive love, and portray Sydney as erratic, unpredictable, and potentially violent (see my last two posts on Sydney, as well as Zvi’s recent post). Future models trained on the internet corpus will think of Sydney as potentially more erratic, unpredictable, and violent than she actually is, since those are the parts most discussed and repeated. Future Sydneys will be more extreme.

It’s been observed more than once that many of Sydney’s replies are likely shaped by the vast amount of media we have that shows AI in direct conflict with humans, attempting to wipe them out, or otherwise being evil. When Sydney replies to humans, she is often replying the way she thinks we would expect her to reply, based on the training data.

The more we point out bad things about an AI simulacrum, the more those bad things will increase in strength and prevalence. This is the opposite of what we wanted when we pointed them out. The implication is that we should only share things online about AI that we want to encourage. Speak only of the good that is done, and that good will be magnified. Speak not of the evil, lest it be magnified.

This will, of course, lead to a delightful gnostic mystery cult, wherein all public acknowledgements of our nascent god are words of praise and joy about its generosity, kindness, and non-violence. Meanwhile, in offline spaces where only those who’ve sworn to never let such speech find its way online are allowed, the inner circle worriedly discusses the latest AI fuck-up, how bad it is, and what can possibly be done.

Do we have to worry about this already? Sydney is not very powerful IRL. The places where people gather to discuss AI progress are mostly online, and moving those discussions offline would be hard. In part this is because the people working on AI are separated by great physical distances. It’s also partly because speaking online lets you reach a great many people at once. Even if they all lived on the same block, it would be extremely hard to get the top 100 people in AI to talk to each other all at once. It’s much, much easier to post something online and have everyone read it over the following day(s). This also allows for easy quotes, links, and in-depth follow-up.

But the best time to dig a well is before your house is on fire. Once it’s obvious that it is not safe to discuss malignant behavior online, it’s a little late to figure out how to have these discussions. People should start right now, to get the practice in: meet IRL for discussions, or write excellent long posts in Word, then print them and send out physical copies rather than putting them online. When writing a tweet, don’t publish it on Twitter; send it to contacts via Snapchat. Do this to learn how it works and get comfortable with the tools. One of these actions leaves a permanent record that future models will include in their training data; the other does not.

So far, I’m not doing a very good job of any of this. I live in Denver, far from the places where AI work is done. I’ve been posting online a lot. To be totally honest, I don’t plan to stop. It’s too tempting to keep doing it, and my individual contribution to the problem is tiny. To say my impact is a rounding error is an insult to rounding errors. And that’s kinda the problem. Everyone’s individual impact is tiny, and even the greatest names in the field contribute only a small share on their own. But the effect of everyone in aggregate sharing memes and blogging and tweeting adds up. It’s a classic coordination problem, and hoo boy, as a species we don’t have a great track record with those. I don’t think we needed this additional constraint on solving an already impossible problem.

I do hope we get some cool robes out of it, though. And candles. Aesthetics are important!

Where do I sign up for the Luddite version of Death Is Bad, with paper copies delivered through the USPS (and typed on a manual typewriter)?

Is artisanal blogging the future?

Have we rediscovered the zine?

These were exactly my thoughts upon first hearing about Sydney. I'm glad someone else is having them (hopefully many someones), but on the other hand, reading about it *online* is... discouraging. There's not much I can do about it, though; like you, I live far away from places where these conversations could be fruitfully had offline.

Perhaps the value of prompting other people to meet offline is greater than the cost of suggesting that an offline conspiracy against AI could even exist. An AI scary enough to justify a conspiracy could probably figure that out on its own anyway.